As online communities grow, keeping their users safe has become a must. In this tutorial, we will look at how Artificial Intelligence (AI) can be used for image moderation, and specifically how Social+ uses AI image moderation to scan images for inappropriate or offensive content before they are published.

We will guide you through enabling and disabling this feature, explain how to set the confidence level for each moderation category to suit your community, and answer common questions about how moderation decisions are made. Join us as we navigate the future of online safety with AI-powered image moderation.

Prerequisites

Before we dive into the steps, ensure you have the following:

- Access to the Social+ Console

- An app instance integrated with your platform (you can use one of our UI Kits as a sample project)

Note: If you haven’t registered for a Social+ account yet, we recommend following our step-by-step guide on how to create your new network.

Understanding Image Moderation

Image moderation scans every image uploaded in posts and messages for inappropriate, offensive, and unwanted content before it is published. It is powered by Amazon Rekognition, which detects images containing nudity, suggestive content, violence, or disturbing content. This lets you build a safer online community for your users without manually reviewing each image.

Enabling Image Moderation

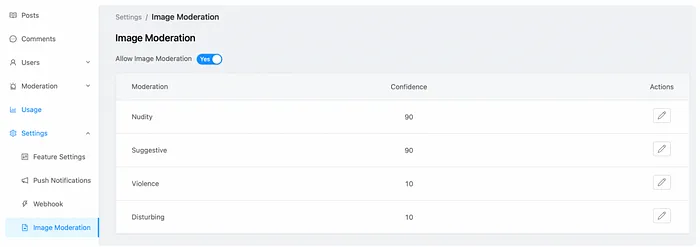

By default, image moderation is disabled. To enable it, log into the Social+ Console, go to Settings > Image Moderation, and toggle “Allow Image Moderation” to “Yes”. Once enabled, you will need to set the confidence level for each moderation category.

Setting Confidence Levels

The confidence level you set for each category is the threshold that decides whether an image is blocked, so it directly controls how strict moderation is. Four categories are available:

- Nudity: This category scans for explicit or implicit nudity in the images.

- Suggestive: This category scans for images that may not contain nudity but are suggestive in nature.

- Violence: This category scans for images that depict violent acts or situations.

- Disturbing: This category scans for images that may be disturbing or unsettling to some users.

By default, the confidence level for each category is set to “0”. Because any confidence score meets a threshold of 0, leaving even one category at 0 will likely block every uploaded image, regardless of whether it contains inappropriate content. Make sure to raise every category above 0 after enabling the feature.
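The effect of a 0 threshold can be checked with a small local model of the settings. This is a minimal sketch, assuming the four thresholds are kept in a Python dictionary on a 0 to 100 scale; the `CATEGORIES` keys and the `validate_thresholds` helper are hypothetical and not part of the Social+ Console or SDK.

```python
# Hypothetical local model of the Console settings; not a Social+ API.
CATEGORIES = ("nudity", "suggestive", "violence", "disturbing")


def validate_thresholds(thresholds: dict[str, float]) -> list[str]:
    """Return human-readable warnings for a threshold configuration."""
    warnings = []
    for category in CATEGORIES:
        if category not in thresholds:
            warnings.append(f"{category}: no threshold set")
            continue
        value = thresholds[category]
        if not 0 <= value <= 100:
            warnings.append(f"{category}: {value} is outside 0-100")
        elif value == 0:
            # Any returned score is >= 0, so a 0 threshold is met by every scored image.
            warnings.append(f"{category}: threshold 0 will likely block every image")
        elif value < 50:
            warnings.append(f"{category}: below 50, expect more false positives")
    return warnings


# The default configuration: every category left at 0.
defaults = {category: 0 for category in CATEGORIES}
for warning in validate_thresholds(defaults):
    print(warning)
```

Running it against the defaults prints one warning per category, which mirrors why the defaults need to be changed before relying on the feature.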

Higher thresholds generally produce fewer false positives (acceptable images being blocked), though they can let more borderline images through. A confidence value below 50 is likely to return more false positives than a higher value, so use a value below 50 only when lower-confidence detection is acceptable for your use case.
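Social+ sends images to Amazon Rekognition for you, so this step is not required. If you want to inspect raw confidence scores for your own sample images, though, the sketch below calls Rekognition's `DetectModerationLabels` operation through boto3. Its `MinConfidence` parameter filters out labels below the given value (Rekognition uses 50 when it is omitted). The sketch assumes boto3 is installed and AWS credentials are configured; the helper names are hypothetical, and Rekognition's label names will not necessarily match the four Social+ category names.

```python
# Hypothetical helpers for inspecting Rekognition scores; not part of Social+.


def label_scores(response: dict) -> dict[str, float]:
    """Map each returned moderation label name to its confidence (0-100)."""
    return {
        label["Name"]: label["Confidence"]
        for label in response.get("ModerationLabels", [])
    }


def detect_label_scores(image_path: str, min_confidence: float = 50.0) -> dict[str, float]:
    """Call Rekognition directly (requires boto3 and AWS credentials)."""
    import boto3  # imported here so label_scores() works without boto3 installed

    client = boto3.client("rekognition")
    with open(image_path, "rb") as image_file:
        response = client.detect_moderation_labels(
            Image={"Bytes": image_file.read()},
            # Rekognition omits labels whose confidence is below MinConfidence.
            MinConfidence=min_confidence,
        )
    return label_scores(response)
```

Calling `detect_label_scores` on the same image with a `min_confidence` below 50 can return additional low-confidence labels, which is where the extra false positives tend to come from.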

Disabling Image Moderation

To disable image moderation, toggle “Allow Image Moderation” to “No”. Images uploaded after that will no longer be sent to the image recognition service, so inappropriate content in them will not be detected or blocked automatically.

Understanding the Outcome

What happens when an uploaded image is detected to contain unwanted imagery? It depends on the confidence threshold set in the Social+ Console for each moderation category. If the confidence score returned by Amazon Rekognition for a category is equal to or higher than the threshold set in the Console for that category, Social+ blocks the image from being uploaded. An image only needs to meet the threshold of one category to be blocked.

Let’s consider two use cases:

1. Use case 1: Your settings are Nudity: 99, Suggestive: 80, Violence: 10, Disturbing: 50, and Amazon Rekognition returns Nudity: 80, Suggestive: 85, Violence: 5, Disturbing: 49 for an uploaded image. The image is blocked because the Suggestive score (85) is higher than its threshold (80).

2. Use case 2: With the same settings, Amazon Rekognition returns Nudity: 80, Suggestive: 79, Violence: 5, Disturbing: 49 for an uploaded image. The image is not blocked because every score is below its category's threshold (for example, Suggestive 79 is below 80, and Disturbing 49 is below 50).
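The blocking rule and both use cases can be reproduced in a few lines of Python. This is a minimal local sketch, assuming each category is compared independently against its own threshold as described above; `blocking_categories` and `is_blocked` are hypothetical names, not part of the Social+ SDK.

```python
def blocking_categories(scores: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Categories whose returned score meets or exceeds the Console threshold."""
    return [
        category
        for category, threshold in thresholds.items()
        # A category with no returned score cannot trigger a block.
        if category in scores and scores[category] >= threshold
    ]


def is_blocked(scores: dict[str, float], thresholds: dict[str, float]) -> bool:
    # One matching category is enough to block the image.
    return bool(blocking_categories(scores, thresholds))


thresholds = {"nudity": 99, "suggestive": 80, "violence": 10, "disturbing": 50}

use_case_1 = {"nudity": 80, "suggestive": 85, "violence": 5, "disturbing": 49}
use_case_2 = {"nudity": 80, "suggestive": 79, "violence": 5, "disturbing": 49}

print(blocking_categories(use_case_1, thresholds))  # ['suggestive']
print(blocking_categories(use_case_2, thresholds))  # []
```

Because the comparison is `>=`, a score exactly equal to its threshold (for example, Disturbing: 50 against a threshold of 50) also blocks the image.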

We recommend preparing a test set of sample images, some that should be allowed and some that should be blocked. Uploading them lets you tune the combination of confidence levels for your specific use case.
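One way to act on this recommendation is to record per-category confidence scores for each sample image and count how a candidate threshold mix would treat them. The sketch below assumes you already have those scores; the `Sample` type, the helper names, and the sample data are hypothetical placeholders, not real Rekognition output.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    name: str
    scores: dict[str, float]  # category -> confidence score (0-100)
    should_block: bool        # your own label for this image


def is_blocked(scores: dict[str, float], thresholds: dict[str, float]) -> bool:
    # Blocked if any returned score meets or exceeds its category threshold.
    return any(
        category in scores and scores[category] >= threshold
        for category, threshold in thresholds.items()
    )


def evaluate(samples: list[Sample], thresholds: dict[str, float]) -> dict[str, list[str]]:
    """Split misclassified samples into false positives and false negatives."""
    result: dict[str, list[str]] = {"false_positives": [], "false_negatives": []}
    for sample in samples:
        blocked = is_blocked(sample.scores, thresholds)
        if blocked and not sample.should_block:
            result["false_positives"].append(sample.name)
        elif not blocked and sample.should_block:
            result["false_negatives"].append(sample.name)
    return result


# Hypothetical placeholder scores for illustration only.
samples = [
    Sample("beach_photo.jpg", {"nudity": 20, "suggestive": 62, "violence": 1, "disturbing": 3}, False),
    Sample("fight_scene.jpg", {"nudity": 2, "suggestive": 5, "violence": 88, "disturbing": 40}, True),
]

for candidate in (
    {"nudity": 99, "suggestive": 60, "violence": 50, "disturbing": 50},
    {"nudity": 99, "suggestive": 80, "violence": 50, "disturbing": 50},
):
    print(candidate, evaluate(samples, candidate))
```

With these placeholder scores, the first mix blocks `beach_photo.jpg` (a false positive) while the second classifies both samples as labeled. Lowering a threshold tends to trade false negatives for false positives, so the goal is the mix that best matches your own labels.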

Final Thoughts

AI-powered image moderation is a game-changer in creating safer online communities. With image moderation in Social+, every image uploaded to posts and messages on your network is scanned for inappropriate content before it is published. Remember, the key to effective image moderation lies in setting the right confidence level for each moderation category. With this technology at your fingertips, you can create a more secure and inclusive online environment for all users.